A data warehouse brings together data from many different sources into a centralized repository, making it available for organizations to derive powerful analytical insights to make data-driven decisions. This central repository lets users join disparate data sets, slice and dice their data, and drill up or down at any level of detail to find insights on large volumes of data. While extremely powerful, this potential can lead organizations to make their data warehouse perform functions for which it was never designed.

Most data warehouses are analytical data warehouses designed for long-term storage and analysis on historical data. But more organizations want to use their freshest data for day-to-day decision-making. That requires a fundamentally different kind of data warehouse - an operational data warehouse - to support it.

In this article, we’ll explore what an operational data warehouse is, how it differs from a traditional data warehouse, and why you may need one.

Deciphering your data workloads - is it analytical or operational?

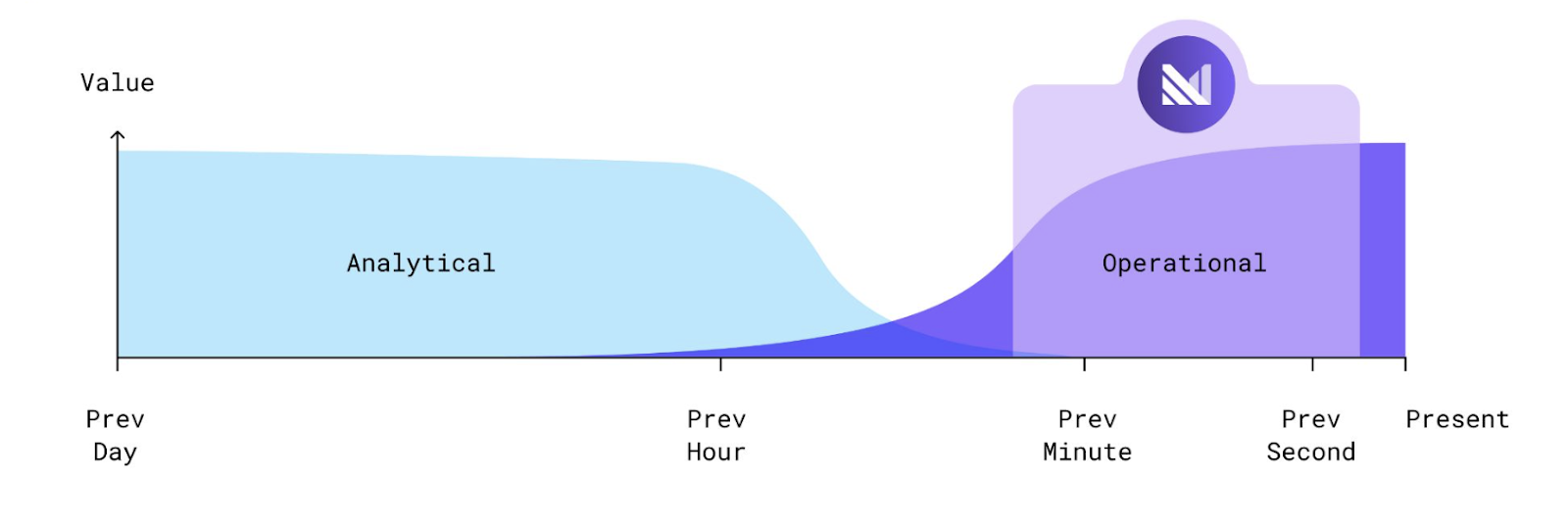

Broadly speaking, there are two different types of data workloads - analytical and operational. Analytical workloads are workloads where you are looking back into the data that has been collected in the past weeks, months, or perhaps years to help make data-driven decisions for your business. On the other end of the spectrum are operational workloads, which demand swift responses based on data that is arriving in real-time. Businesses will find that operational workloads are more valuable the fresher the data is; they cannot afford slow, stale, or incorrect data. For example, for a fraud detection use case, the ability to detect an account takeover immediately once it has occurred can save a financial services company tens of millions of dollars. Selecting the appropriate data warehouse depends on the type of workload you are trying to run.

Use an analytical data warehouse to grind through batched data

Traditional data warehouses are best suited for analytical data workloads, meaning they are optimized for the development and deployment of analytical queries that provide insight into data collected in the past. Large amounts of data from multiple different sources are collected and processed in batches as it is loaded into the data warehouse at scheduled intervals, such as hourly, daily, weekly, or monthly.

It is crucial that a system supporting analytics keeps the underlying data consistent so that all analytics are queried on a consistent source of truth. This consistency requirement means that analytical data warehouses are designed for static data used in large volumes to support a wide variety of SQL queries to answer different questions a business may have. A system optimized in this way is perfectly suited for exploratory analysis that digs through large volumes of historical data. This style of analysis can work with cached data and doesn’t have strict uptime requirements.

However, some data applications require that fresh data be streamed constantly without interruptions. Rather than an analytical warehouse optimized for long-term analysis, this requires an operational data warehouse, optimized for real-time data streaming, to drive decisions based on the freshest data available.

Use an operational data warehouse to serve real-time workloads

For operational work, organizations can’t afford slow, stale, or incorrect data. An operational data warehouse is designed to stream new data from across an organization and process it immediately. It provides interactive access to up-to-date data, automating actions on certain events, and generally reacting to things that are happening right now or have just happened. It sits upstream from the traditional data warehouse, connected to the data sources or ETL pipelines that feed into the analytical warehouse.

An operational data warehouse receives data as events happen, and can transform, normalize, and enrich the data as it lands. It can immediately act on the data, including updating materialized views and indexes, and informing downstream dependencies. By abstracting the streaming data internals, users can easily access the data by executing SQL queries against up-to-date data. The data can be replicated to an analytical data warehouse for longer-term storage and analysis, and when appropriate, gets retired from the operational data warehouse.

Essential pillars of an operational data warehouse

Operational workloads require you act on critical data the moment it changes. As a result, the operational data warehouse must be purpose-built to handle these workloads with trust, scale, and ease.

You have to trust your tools in order to automatically act on data the moment it changes. Using a single platform to manage all of your operational data means that you need that tool to scale with you as your data, use cases, or number of active users changes. And, you need to be able to implement, change, and maintain your operational use cases in a way that extends your team, rather than overwhelming them.

Trust

An operational data warehouse must be trusted to deliver the freshness, responsiveness, and consistency required for your business’ operational tasks. Data must be captured and updated as soon as events occur. It must also be consistent across access points, presenting answers that are correct, even as the data continues to change.

Scale

A data warehouse ingests data from disparate data sources, so it must scale up to handle both large volumes of data in motion as well as the client demand needed to perform operational work. It should also scale down when demand on the system ebbs.

Ease

No matter how complex its streaming internals, an operational data warehouse must provide an easy way for its clients to access its data. This is an Achilles heel of many existing streaming solutions, which require engineers to code brittle point-to-point solutions for specific endpoints to keep data up-to-date. Instead, teams should simply be able to use SQL, the de facto language for data processing, to describe views and business logic while trusting the warehouse to provide fresh and consistent results on data as it updates.

Use cases for an operational data warehouse

Since an operational data warehouse is built for fast changing streaming data, it benefits any use case that requires constantly up-to-date information.

One common example is an alert or notification system. Streaming data lets alert systems detect specific conditions and trigger notifications as soon as the data arrives in the data warehouse. This could be a security camera system alerting of unusual activity, fraud detection for a payment system, or providing real-time updates on the delivery status for customers of an online store.

Another typical scenario involves dynamic responses based on fast changing data. This could be a system that segments customers based on their current activity, or a dynamic pricing system that adjusts to market conditions, or a targeted advertising system that learns and adapts to real-time user searches.

Another common use case is automation. An operational data warehouse can promptly access new data as it arrives, allowing data pipelines to process data automatically. Systems like these can offload real-time work from traditional data warehouses that aren’t optimized for low latency response to drive fully automated workflows.

Every company needs an operational data warehouse

Companies often wind up using their analytical data warehouse to fulfill their operational needs. They “modernize” their data warehouse, bringing streaming infrastructure into the mix. At some point, however, this process starts to break down. Analytical data warehouses are built on a batch update and orchestrated query model that requires caching for efficient access. They are not designed to support the operational work that requires fast, consistent, and up-to-date data. As time wears on, engineering your way out of this problem gradually becomes complex and cost-prohibitive.

In contrast, an operational data warehouse is built from the ground up to serve real-time operational use cases. Data is ingested continuously, and the freshest data is immediately available to query. As a result, query workloads are proactively updated, rather than stale until re-run, and updated results are communicated to downstream systems that can react immediately.

As you evaluate your operational workloads, you should consider moving them from an analytical to an operational data warehouse if:

You need to act on data that is frequently changing. Batching data before ingesting it into an analytical data warehouse just isn’t gonna cut it. Your business relies on these real-time signals and missing them could impact your business.

You are constantly re-running the same workloads. You want your SQL queries to be consistent and get to the “current state” of data as it changes so you can act on it appropriately.

You are experiencing technical limitations with analytical data warehouses to keep up with operational requirements. You can either continue to throw money and resources to build a complex solution or leverage an operational warehouse that is designed from the ground up to service your time-sensitive use cases.

Materialize: The Operational Data Warehouse

Operational data warehouses provide a variety of benefits of working with real-time data. If it seems like an operational data warehouse is right for you, we welcome you to learn more about Materialize. Materialize combines the ease of use of a data warehouse with the speed of streaming to serve your real-time operational use cases with maximum efficiency. Try Materialize today to see how you can move beyond analytics and operate your business with data.